Fat protocols aren’t new: What blockchain can learn from p2p file sharing

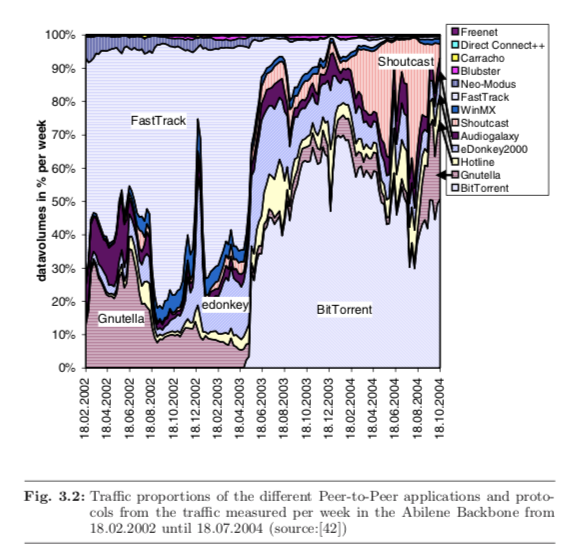

Starting in 1999, we saw an explosion of p2p file sharing technology. We had Gnutella (the protocol behind Limewire), FastTrack (Kazaa), eDonkey, and BitTorrent. Limewire, Kazaa, and eDonkey all died, but the protocols they helped create lived on!

People have speculated on what a future with fat protocols will look like. File sharing protocols aren’t exactly the same as blockchain protocols, but they have a lot of similarities we can learn from.

Looking at the p2p ecosystem, a few things are clear:

- Fat protocol ecosystems have way more variety and experimentation when network effects don’t stop people from creating new clients

- Protocols live on and evolve regardless of whether the original creators are working on them or not

- The application layer seems to capture little value for the protocol creator, but third party competition to build on top of the protocol may be a powerful force for finding product/market fit

- While people weren’t able to figure out how to integrate a token into p2p file sharing technologies back in the day, it does seem like a properly calibrated token marketplace would have solved a lot of problems and accelerated p2p’s breakneck adoption even more.

Vibrant and competitive ecosystems

… by replicating and storing user data across an open and decentralized network rather than individual applications controlling access to disparate silos of information, we reduce the barriers to entry for new players and create a more vibrant and competitive ecosystem of products and services on top.

Fat Protocols from Union Square Ventures

What file sharing application did you use before BitTorrent? Was it LimeWire, BearShare, Shareaza, giFT, Morpheus, Phex, or Acquisition? All of those were separate applications made by different teams, and they all connected to the Gnutella network! There were also FrostWire, LimeWire Pirate Edition, and WireShare, which were forks of LimeWire’s client.

I’m not going to do the same song and dance here listing a dozen clients for FastTrack and eDonkey, but I could. Every protocol had tons of clients. In fact, LimeWire didn’t create the Gnutella protocol; their app was just really popular because they added a lot of features.

Features

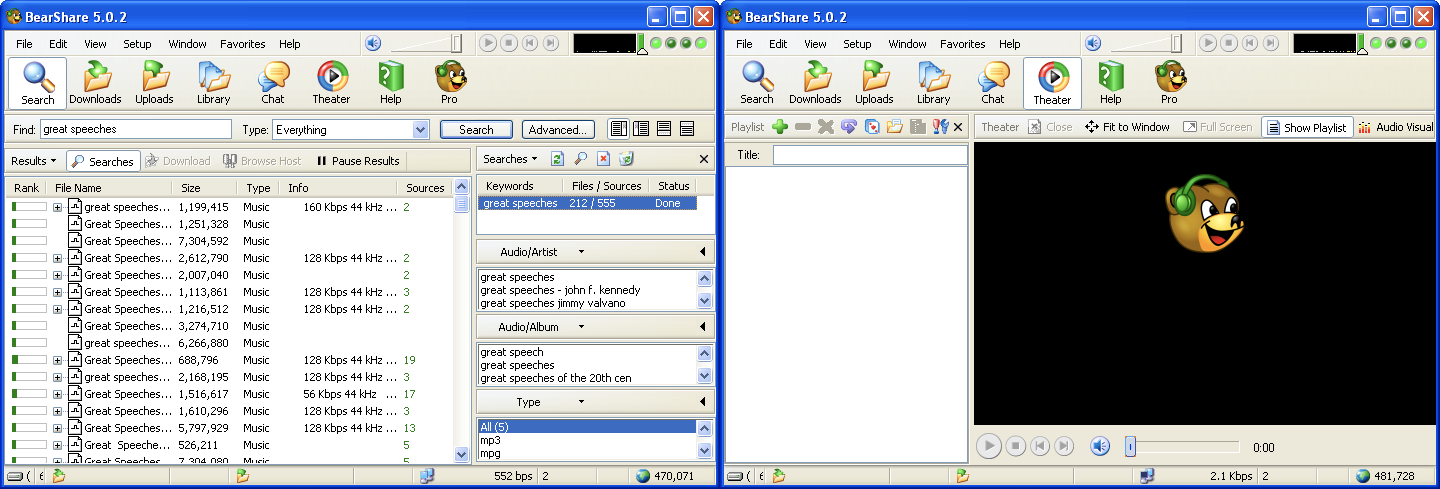

People didn’t all just build near-identical clients, of course. Different companies competed to build Gnutella clients that served different needs. BearShare seemed to focus on building a feature-rich client. It had a straightforward search, a “theater” to preview movies and music while they downloaded, and plenty of other features, like a place to chat with other users.

Quality

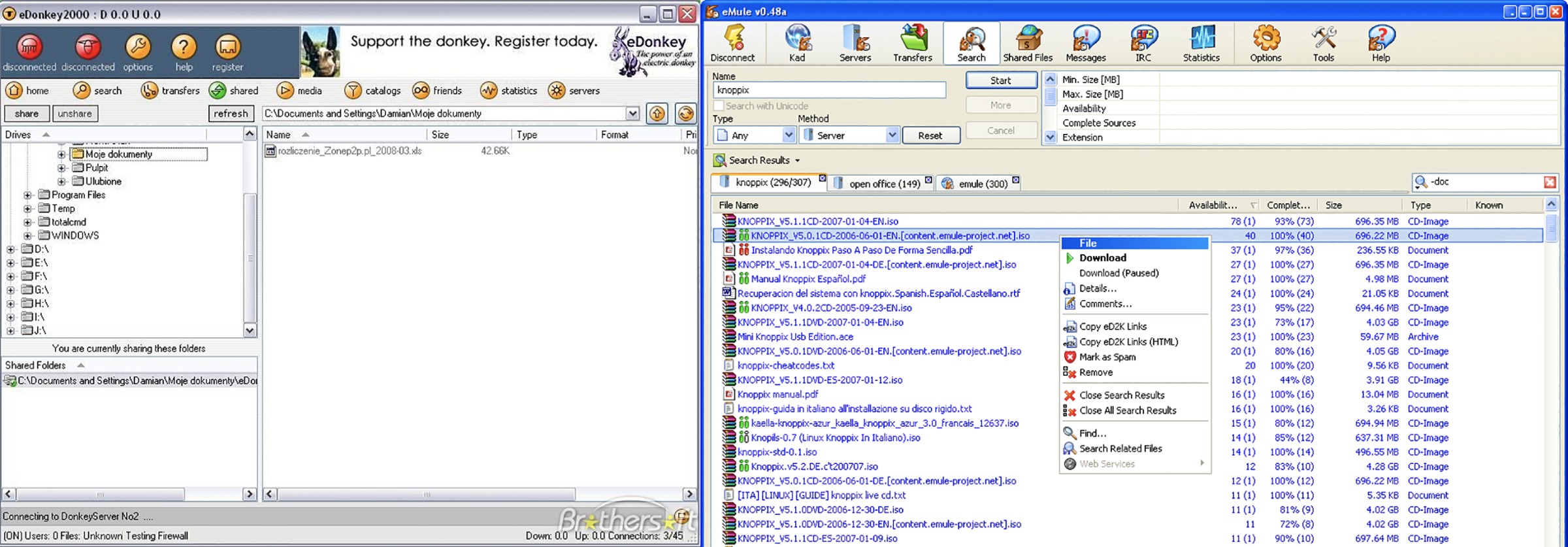

The eDonkey company developed its own network (“eDonkey2000 Network”) and a corresponding client. Even though it developed the protocol, it had to compete with a very popular open source client called eMule, which many viewed as having a better user interface!

Generalization

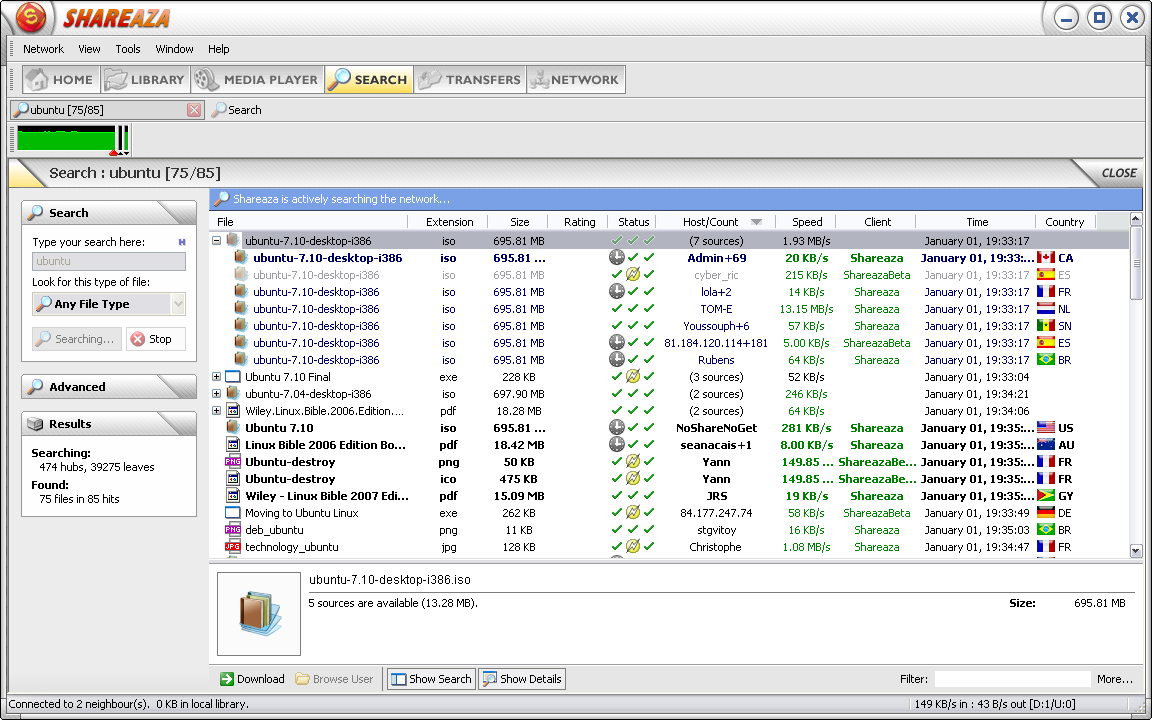

Some people tried improving the user experience via better interfaces. Others tried to provide access to as much content as possible by creating clients that used multiple networks.

Shareaza became popular by supporting every file sharing protocol under the sun:

Shareaza … supports the Gnutella, Gnutella2, eDonkey, BitTorrent, FTP, HTTP and HTTPS network protocols and handles magnet links, ed2k links, and the now deprecated gnutella and Piolet links

Abstraction

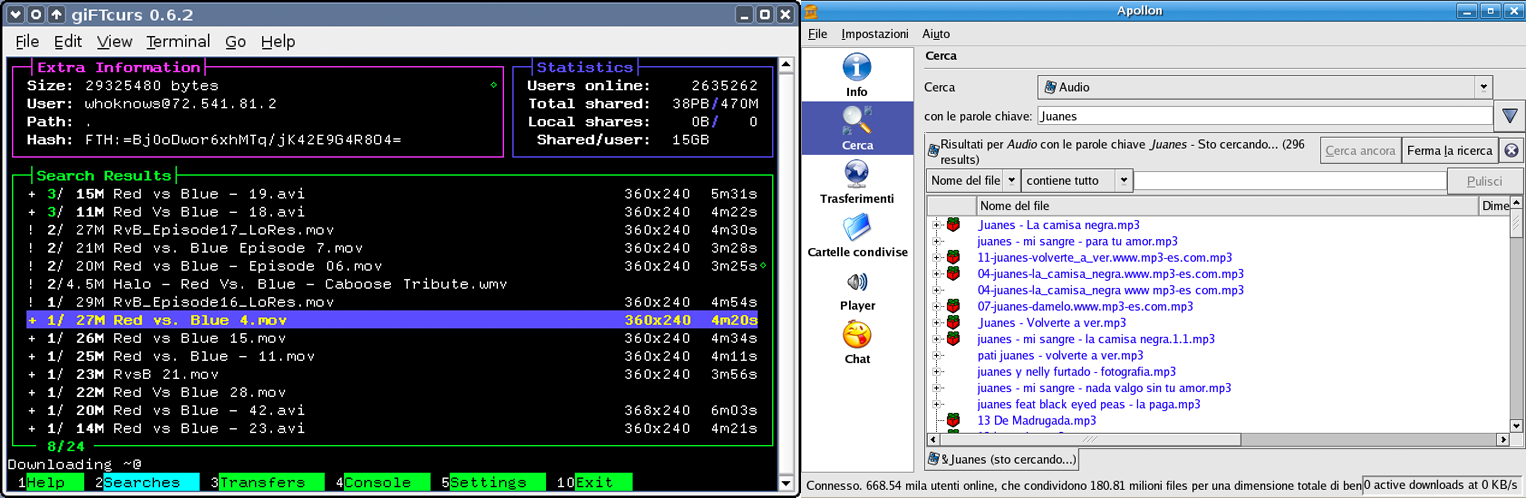

The giFT project was similar to Shareaza in trying to implement every protocol, but it aimed to be a file sharing backend that others could build user interfaces for:

giFT supported most protocols and was compatible with Windows, Linux, and Mac.

There were hundreds of third party applications for interfacing with the fat protocols of the file sharing world. Both open source communities and independent for-profit companies leaped at the opportunity to provide what they thought end users wanted. These applications are not like third party Twitter clients: they can do anything the protocol supports, and the protocol creators can’t stop them.

A potential takeaway here is that fat protocols split product/market fit into two distinct parts:

- Protocol/market fit: is the protocol powerful and general enough to let applications give users a good experience?

- Application/consumer fit: does the application optimize how the end user wants to use the protocol?

You can’t just ship a protocol and call it a day. eDonkey’s client popularized its network, and LimeWire popularized Gnutella. Once a protocol has network effects, though, it is anyone’s ballgame to build a better interface and steal away users of the original frontend.

Competitors don’t have to totally reenvision the frontend; they can just subtract annoying things like ads. That is what Kazaa Lite, FrostWire, WireShare, and many others did. The incentives for app/consumer fit and protocol/market fit are different. If the protocol doesn’t protect against prisoner’s dilemma incentive issues, applications can differentiate by acting selfishly: Kazaa Lite and GreedyTorrent both help you lie to the network about how much you’ve uploaded.

A core tenet of the Y Combinator playbook for startups is to talk to your users. If you’re interested in building a third party app on top of a fat protocol, the lesson might be to also talk to competing apps’ users to figure out what needs aren’t being served. In a similar vein, protocol developers should talk to app developers and learn what they think end users want.

Are thin applications less valuable?

This relationship between protocols and applications is reversed in the blockchain application stack. Value concentrates at the shared protocol layer and only a fraction of that value is distributed along at the applications layer.

Fat Protocols from Union Square Ventures

It was very hard for the creators of fat protocols to monetize through their thin applications. LimeWire, Kazaa, and eDonkey attempted to monetize their clients by installing toolbars, collecting user data, and displaying ads. Maybe these tactics brought in some money, but they quickly led to competing forks of the apps without the nuisances. If someone visits your website today with an ad blocker, you can at least still ask them to pay. If a user switches to a different frontend, you lose the ability to communicate with them entirely.

People don’t care about protocols

It might be hard for protocol creators to capture value through their own thin clients, but third party clients do seem to provide value over time. FrostWire was originally a fork of LimeWire, but years later, when BitTorrent became the clear p2p winner, it added BitTorrent support. Morpheus, Acquisition, MLDonkey, and many others did the same.

Application developers just care about giving their users a good experience; they aren’t loyal to a particular fat protocol. If a previously popular protocol is overthrown by a new one, a third party application developer might switch to the new protocol with minimal impact on the end user.

Parallel lean startups

The lean startup methodology says that “every startup is a grand experiment that attempts to answer a question.” The key insight is that startups are all about iterating and trying out ideas until you find product/market fit. Steve Blank formalizes startup iteration as a repeating loop.

Remember, there were dozens of applications for each popular p2p fat protocol, each trying its own approach: different teams with different ideas about what people wanted, each iterating on its own designs and trying to find application/consumer fit. Finding product/market fit for a traditional company may be inherently serial, while finding application/consumer fit may be embarrassingly parallel.

Specialization

While fat protocols generalize over a concept like sharing files, thin applications can specialize as much as they want. BitTorrent can carry any kind of file, but PopcornTime specializes in streaming movies.

The most private torrent community in the world exclusively shares copyrighted magic tricks. eDonkey network clients specialized around video because the eDonkey protocol was really good at dealing with big files.

We shouldn’t just think of thin applications as a vicious competition to build the best frontend for a protocol. If the fat protocol is sufficiently general, application developers can create an entirely different user experience by focusing on a particular use case.

Protocol improvements

the evolution of these protocols will be governed by the decision of those who have adopted it to adopt a future version. This has the potential to provide a much more democratic process for changing protocols over time than the historic committee process.

Crypto Tokens and the Coming Age of Protocol Innovation by Albert Wenger

There were a lot of competing protocols in the p2p space and they all evolved with time. While there weren’t token holders for these protocols, I think we can still learn a lot from protocol evolution in the p2p space.

eMule vs eDonkey

As I mentioned in the previous section, the open source eMule client gave eDonkey quite the run for its money. eMule actually went even further and basically forked the protocol.

To push the eDonkey universe closer to pure decentralization, the creators of the protocol released Overnet. Overnet was a proprietary protocol storing all file and peer information in distributed hash tables using a protocol called Kademlia.

In response, the eMule community launched the Kad Network, an open source protocol built on distributed hash tables using Kademlia! Since eMule was very popular, the Kad network may have actually been more popular than Overnet.
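Both Overnet and the Kad Network rest on the same core idea from Kademlia: the distance between two IDs is their bitwise XOR, and a key is stored on the nodes whose IDs are closest to it. A minimal Python sketch, assuming SHA-1 derived 160-bit IDs as in the Kademlia paper; the ID sizes, routing tables, and message formats of Overnet and Kad are not modeled, and `node_id` and `closest_nodes` are hypothetical helper names:

```python
import hashlib

def node_id(name: str) -> int:
    """Derive a 160-bit ID by hashing a name with SHA-1."""
    return int.from_bytes(hashlib.sha1(name.encode()).digest(), "big")

def xor_distance(a: int, b: int) -> int:
    """Kademlia's distance metric: the bitwise XOR of two IDs, read as an integer."""
    return a ^ b

def closest_nodes(target: int, nodes: list[int], k: int = 3) -> list[int]:
    """Return the k known node IDs closest to `target` under XOR distance."""
    return sorted(nodes, key=lambda n: xor_distance(n, target))[:k]

# A file's key is a hash; the nodes closest to that key store pointers to peers with the file.
peers = [node_id(f"peer-{i}") for i in range(20)]
file_key = node_id("some-file.avi")
for n in closest_nodes(file_key, peers):
    print(f"{n:040x}  distance={xor_distance(n, file_key):040x}")
```

Because every node computes distance the same way, a lookup can keep asking “who do you know that is closer to this key?” with no central index, which is what let both networks drop eDonkey’s servers.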

Separate from the Kad network, eMule introduced a credit system to try to incentivize users to share more.

Community development

Gnutella was created at AOL and it was never actually open sourced. AOL killed the Gnutella client almost immediately after the creators released it. Still, people shared the Gnutella client and reverse engineered the protocol. Every successful application built on top (Limewire, BearShare, Morpheus) was building on a protocol they didn’t originally create.

The Gnutella protocol evolved. If you look at the draft for v0.6, you’ll see that LimeWire and BearShare (“Free Peers”) contributed a lot of new features to the protocol, and the community generally worked together to create the spec.

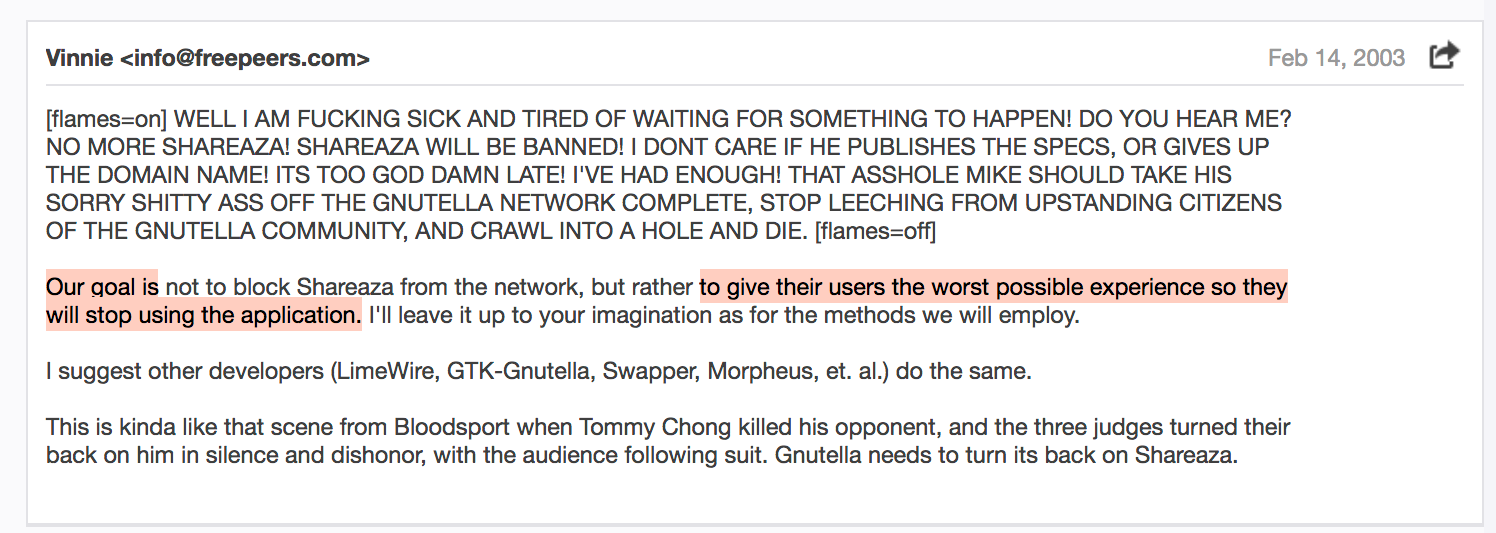

When a lone developer created a new protocol, called it Gnutella2, and tried to push it on the Gnutella development community, people freaked out:

One of the creators of BearShare said he would block Gnutella2 traffic and encouraged others to do so. Most of the community pushed back and said it should be called “Mike’s Protocol.” Not as many clients adopted Gnutella2.

Looking at the history of file sharing fat protocols, I see committee-like cooperation among the Gnutella developers who worked to develop 0.6. The eMule community essentially forked eDonkey’s protocol. People never fully reverse engineered FastTrack so I’m not sure if any third parties ever extended it. BitTorrent has BitTorrent Enhancement Proposals (BEP) just like Bitcoin has Bitcoin Improvement Proposals (BIP).

The Gnutella community makes it fairly clear that, when a lot of developers are building businesses or large open source projects on top of a protocol, they want to think carefully about protocol improvements for the sake of backwards compatibility and also enhancing their own apps.

eDonkey’s protocols were proprietary, which is likely why eMule didn’t adopt Overnet. Gnutella2 was developed by the creator of Shareaza, the client that implemented every protocol. His lack of interest in peer review and his desire to push Gnutella2 on everyone else bred resentment. The main lesson here seems straightforward:

- Protocols seem to evolve democratically in civil communities (Gnutella 0.6, BitTorrent)

- Protocols seem to fork when relationships feel adversarial (Gnutella2, Kad Network)

Incentivizing adoption

… an open network and a shared data layer alone are not enough of an incentive to promote adoption. The second component, the protocol token which is used to access the service provided by the network (transactions in the case of Bitcoin, computing power in the case of Ethereum, file storage in the case of Sia and Storj, and so on) fills that gap.

Fat Protocols from Union Square Ventures

I want to stay within the scope of what p2p protocols tell us in this post, so I can’t answer the direct question of “do tokens promote adoption?” since there wasn’t a mainstream p2p token. Instead, we’ll have to answer similar questions:

- What did adoption look like?

- Would a token have made sense?

- Did people talk about adding tokens?

- Did people actually try to add tokens to p2p protocols?

To address token related questions, we need to understand the dynamics of p2p protocols so we can understand if a token economy would make sense.

People LOVE free stuff

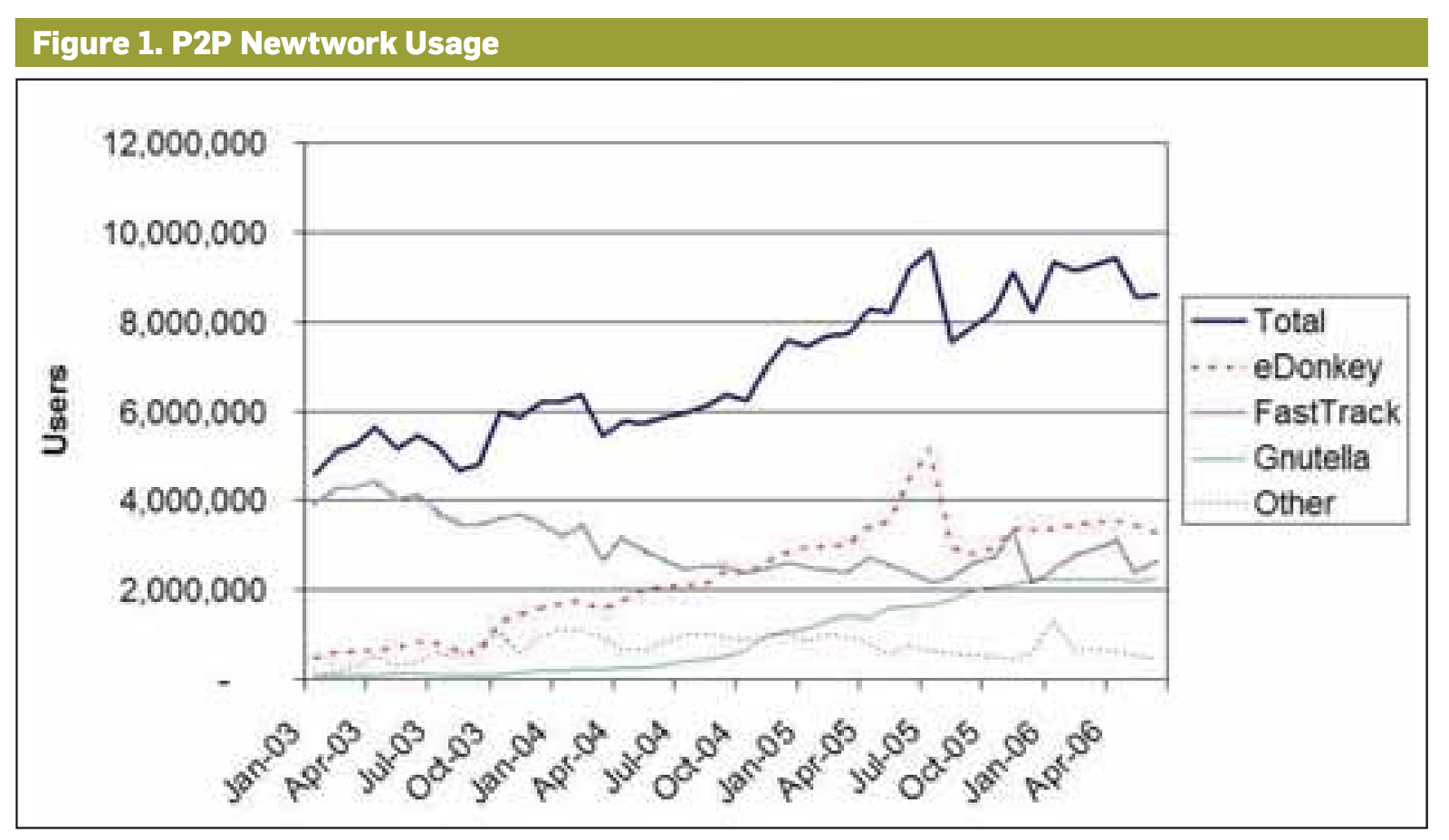

This is a boring but important point. I think we all know that people enthusiastically adopted the fat protocols of file sharing. All of the networks saw huge growth despite widespread legal threats.

I called this a boring point because, well, who doesn’t want free stuff? The important (and only somewhat pedantic) takeaway is that people will happily adopt fat protocols if it is worth it to them.

Free Riders

A lot of file sharing systems have free rider problems. Most people download what they want and offer up nothing in return. From the widely cited paper “Free Riding on Gnutella”:

we found that nearly 70% of Gnutella users share no files, and nearly 50% of all responses are returned by the top 1% of sharing hosts.

So by default everyone wants to download but no one wants to share. This manifests in a few ways:

- Most users don’t share their own files with the network

- Among those that do share files, many of them don’t share desirable files

- Most users download a file and then close their client. Ideally, they would keep it open and help other users download the same file
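To make the two numbers in that quote concrete, here is a sketch of how they could be computed from a crawl that records, per host, how many files it shares and how many query responses it returned. The input format and the function name are hypothetical; this is not the paper’s methodology:

```python
def free_riding_stats(shared_files: list[int], responses: list[int]) -> tuple[float, float]:
    """Return (fraction of hosts sharing no files,
               fraction of all query responses returned by the top 1% of hosts).

    shared_files[i] and responses[i] describe host i in a (hypothetical) crawl.
    """
    n = len(shared_files)
    free_riders = sum(1 for s in shared_files if s == 0) / n
    top_k = max(1, n // 100)  # the top 1% of hosts, but at least one host
    top = sorted(responses, reverse=True)[:top_k]
    total = sum(responses)
    return free_riders, (sum(top) / total if total else 0.0)

# 100 hosts: the first 30 share files, 70 share nothing, and one busy host answers half of all queries.
shared = [5] * 30 + [0] * 70
responses = [50] + [2] * 25 + [0] * 74
print(free_riding_stats(shared, responses))  # (0.7, 0.5)
```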

Bad Files

File sharing protocols had a major problem with people sharing bad files. From the widely cited paper “The EigenTrust Algorithm for Reputation Management in P2P Networks”:

Attacks by anonymous malicious peers have been observed on today’s popular peer-to-peer networks. For example, malicious users have used these networks to introduce viruses such as the VBS.Gnutella worm, which spreads by making a copy of itself in a peer’s Gnutella program directory, then modifying the Gnutella.ini file to allow sharing of .vbs files. Far more common have been inauthentic file attacks, wherein malicious peers respond to virtually any query providing “decoy files” that are tampered with or do not work

The book “Peer-to-Peer Systems and Applications” cites this problem as a reason for Kazaa dropping in usage:

in Kazaa, the amount of hardly identifiable corrupted content increased significantly due to the weakness of the used hashing algorithm (UUHASH). Thus users switched to applications like Gnutella or eDonkey, where the number of corrupted files was significantly smaller.
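The underlying problem is coverage: if a file’s identifier hashes only part of the file, anyone can alter the unhashed bytes and still match the advertised hash. UUHash is commonly described as hashing the start of a file plus sampled chunks; the sketch below uses an even simpler prefix-only toy hash (explicitly not the real UUHash) to show why partial coverage lets corrupted copies pass as authentic:

```python
import hashlib

PREFIX = 300 * 1024  # bytes covered by the toy hash (an illustrative choice)

def prefix_hash(data: bytes) -> str:
    """Toy 'fast' file hash: covers only the first PREFIX bytes plus the file length.
    A deliberately simplified stand-in, NOT the actual UUHash algorithm."""
    h = hashlib.sha256(data[:PREFIX])
    h.update(len(data).to_bytes(8, "big"))
    return h.hexdigest()

def full_hash(data: bytes) -> str:
    """Hash every byte, so any modification changes the digest."""
    return hashlib.sha256(data).hexdigest()

original = bytes(1024 * 1024)  # 1 MiB of zeros stands in for a song
# A decoy: same length and same first PREFIX bytes, garbage everywhere after that.
decoy = original[:PREFIX] + b"\xff" * (len(original) - PREFIX)

print(prefix_hash(original) == prefix_hash(decoy))  # True: the decoy passes
print(full_hash(original) == full_hash(decoy))      # False: the decoy is caught
```

The trade-off is speed: hashing part of a large file is cheaper, but whatever the hash skips is exactly where an attacker can tamper.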

Bartering with Bandwidth

BitTorrent does a surprising amount of game theory under the hood. At a high level, your torrent client keeps track of who is uploading to you and tries to upload back. If a peer seems to only be downloading and not uploading, your client “chokes” them. This tit-for-tat mechanism maximizes download speeds when a torrent is new and there are more downloaders than seeders.
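The choking decision can be sketched in a few lines. This assumes three regular upload slots plus one “optimistic unchoke” slot, which matches common descriptions of the original BitTorrent client while downloading (a seeding client ranks peers by how fast it can upload to them instead). Real clients also recompute on timers, rotate the optimistic slot, and measure rates over rolling windows, none of which is modeled; `Peer` and `choose_unchoked` are hypothetical names:

```python
import random
from dataclasses import dataclass
from typing import Optional

@dataclass
class Peer:
    name: str
    rate_to_us: float  # how fast this peer has recently been uploading to us (KB/s)
    interested: bool   # whether this peer wants pieces we have

def choose_unchoked(peers: list[Peer], regular_slots: int = 3,
                    rng: Optional[random.Random] = None) -> set[str]:
    """Tit-for-tat: upload to the interested peers that upload to us fastest, plus one
    random 'optimistic unchoke' so newcomers get a chance to prove themselves."""
    rng = rng or random.Random()
    interested = [p for p in peers if p.interested]
    ranked = sorted(interested, key=lambda p: p.rate_to_us, reverse=True)
    unchoked = {p.name for p in ranked[:regular_slots]}
    others = ranked[regular_slots:]
    if others:
        unchoked.add(rng.choice(others).name)  # optimistic unchoke
    return unchoked  # everyone else stays choked

peers = [Peer("a", 50, True), Peer("b", 40, True), Peer("c", 30, True),
         Peer("leech1", 0, True), Peer("leech2", 0, True), Peer("e", 100, False)]
print(sorted(choose_unchoked(peers, rng=random.Random(0))))
```

The optimistic slot matters: without it, a new peer with nothing to offer yet could never receive its first pieces, and so could never start reciprocating.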

BitTorrent does seem to be much faster than eDonkey, which also downloads from multiple peers and starts uploading once it receives pieces of a file. BitTorrent does many things to maximize download speed that other protocols don’t, but I think it is safe to say that the basic market mechanisms it introduces contribute to this speedup.

Optimizing how fast people can download a file right after it is shared is only a narrow slice of the problem, though. Ideally, a market would also incentivize seeding after download. There are other market needs too, like file storage and sharing rare and desirable content.

Kazaa participation level

Kazaa added a naive reputation system (“participation level”) based on how much you gave back to the network. If you shared more, your downloads were faster! They also let people give “integrity ratings” to files they downloaded which would also enhance the uploading user’s participation level. People figured out they could just modify their client and trick the network though, so it didn’t last for long.
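The weakness is easy to see in code. Below is a hypothetical upload/download ratio score in the spirit of participation level (not Kazaa’s actual formula). Because the inputs are statistics the client reports about itself, a modified client can report anything:

```python
from dataclasses import dataclass

@dataclass
class SelfReport:
    """Transfer totals a client reports about itself. No other peer verifies them."""
    uploaded_mb: float
    downloaded_mb: float

def participation_level(r: SelfReport, cap: int = 1000) -> int:
    """Hypothetical upload/download ratio score, scaled by 100 and capped.
    Not Kazaa's actual formula."""
    if r.downloaded_mb == 0:
        return cap if r.uploaded_mb > 0 else 0
    return min(cap, int(100 * r.uploaded_mb / r.downloaded_mb))

honest = SelfReport(uploaded_mb=50, downloaded_mb=500)
# A modified client just reports an inflated upload total; nothing stops it.
cheater = SelfReport(uploaded_mb=10_000, downloaded_mb=500)
print(participation_level(honest), participation_level(cheater))  # 10 1000
```

Whatever the exact formula, any score computed only from self-reported numbers inherits this problem.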

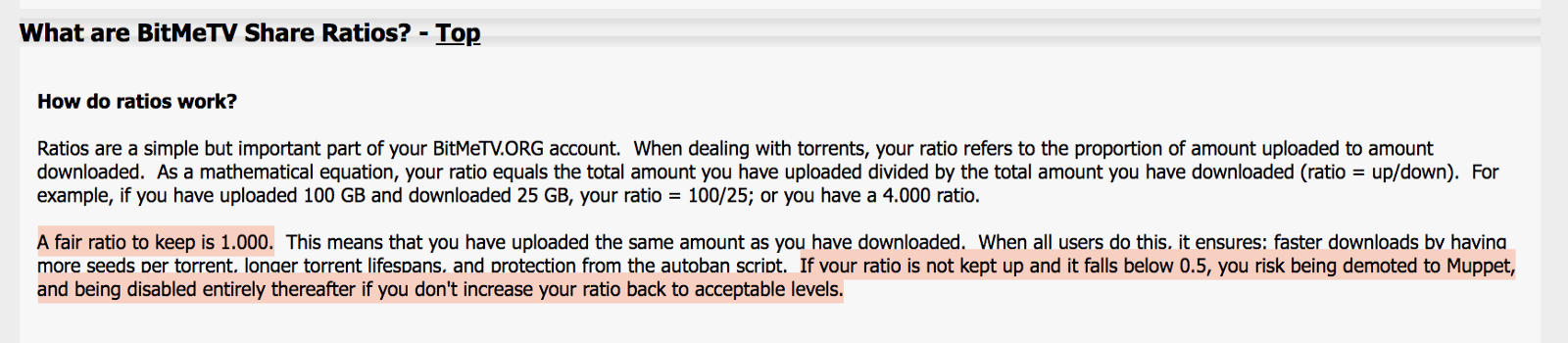

Share or else

Private BitTorrent trackers usually enforce a seeding ratio and ban you if you don’t uphold it.

This works decently well as a mechanism and even encourages some users to buy seedboxes so they can get a good ratio.
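A private tracker can enforce this because it is a central authority with accounts: BitTorrent clients report `uploaded` and `downloaded` totals in their announce requests, and the tracker can check the running totals against a site rule. A minimal sketch; the 0.7 minimum ratio and 5 GB grace allowance are made-up values, since each tracker sets its own:

```python
from dataclasses import dataclass

MIN_RATIO = 0.7          # hypothetical site rule
GRACE_DOWNLOAD_GB = 5.0  # hypothetical allowance before the rule kicks in

@dataclass
class Account:
    user: str
    uploaded_gb: float    # summed from the client's announce reports
    downloaded_gb: float

    @property
    def ratio(self) -> float:
        return float("inf") if self.downloaded_gb == 0 else self.uploaded_gb / self.downloaded_gb

def should_ban(acct: Account) -> bool:
    """Ban accounts that have downloaded past the grace allowance without seeding enough."""
    return acct.downloaded_gb > GRACE_DOWNLOAD_GB and acct.ratio < MIN_RATIO

for acct in [Account("seeder", 80, 40), Account("leech", 1, 10), Account("newbie", 0, 2)]:
    print(acct.user, round(acct.ratio, 2), "ban" if should_ban(acct) else "ok")
```

The grace allowance reflects a practical concern: a brand-new account has no upload history, so banning on ratio alone would lock out every newcomer.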

Decentralized trust

EigenTrust tried to address the bad file problem by layering a trust algorithm on top of the protocol. Basically, if Alice transfers a file to Bob, Bob can rate that transaction. If Bob trusts Alice’s files then he also likely trusts her ratings of others. This transitive trust property helps filter out a lot of malicious content in the network.
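EigenTrust turns that transitive idea into a fixed point: a peer’s global trust is what other peers say about it, weighted by their own global trust, with a set of pre-trusted peers anchoring the computation, i.e. t ← (1 − a)·Cᵀt + a·p. The sketch below is a centralized power iteration (the paper also describes a distributed version); the damping value `alpha = 0.15` and the iteration count are assumptions:

```python
def eigentrust(local: list[list[float]], pretrusted: list[float],
               alpha: float = 0.15, iters: int = 50) -> list[float]:
    """Global trust via power iteration: t <- (1 - alpha) * C^T t + alpha * p.

    local[i][j]: peer i's local trust in peer j (e.g. good minus bad downloads).
    pretrusted:  distribution p over a priori trusted peers (sums to 1).
    """
    n = len(local)
    # Normalize each row of local trust: clip negatives to 0 and divide by the row sum.
    # A peer that trusts nobody defers to the pre-trusted distribution.
    C = []
    for row in local:
        clipped = [max(x, 0.0) for x in row]
        s = sum(clipped)
        C.append([x / s for x in clipped] if s > 0 else list(pretrusted))
    t = list(pretrusted)
    for _ in range(iters):
        # Peer j's new trust is what everyone says about j, weighted by the sayer's trust.
        t = [(1 - alpha) * sum(C[i][j] * t[i] for i in range(n)) + alpha * pretrusted[j]
             for j in range(n)]
    return t

# Peers: A (pre-trusted), B, and a colluding pair M1, M2 that praise each other.
local = [
    [0, 10, -5, -5],   # A: good downloads from B, bad ones from M1 and M2
    [5, 0, -3, -3],    # B: good downloads from A, bad ones from M1 and M2
    [0, 0, 0, 100],    # M1 vouches for M2
    [0, 0, 100, 0],    # M2 vouches for M1
]
trust = eigentrust(local, pretrusted=[1.0, 0.0, 0.0, 0.0])
print([round(x, 3) for x in trust])
```

M1 and M2 praise each other heavily, but since no trusted peer vouches for either of them, their global trust stays at zero while A and B split the trust between them.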

Credits

Kazaa introduced “peer points” that you earned by sharing content on the network. If you received a lot of peer points, you could trade them in for prizes.

eMule created a credit system that rewarded sharing content with another user. If Alice uploads a lot of content to Bob, they both keep a record of that and then Bob will let Alice jump to the top of his download queue if he has a file she wants.
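eMule’s credit system is usually documented as a queue multiplier between 1 and 10 that each client computes locally from its own transfer records with a given peer. The sketch follows that commonly cited formula (the smaller of twice the received/sent ratio and the square root of megabytes received plus 2, clamped to [1, 10]); treat the exact constants and edge cases as assumptions to check against eMule’s source, and the queue scoring as a simplification:

```python
import math

MB = 1024 * 1024

def credit_modifier(received_from_peer: int, sent_to_peer: int) -> float:
    """Queue multiplier for a peer, computed only from my own transfer records with it.

    received_from_peer: bytes this peer has uploaded to me
    sent_to_peer:       bytes I have uploaded to this peer
    """
    if received_from_peer < MB:
        return 1.0  # no meaningful credit yet
    ratio = 10.0 if sent_to_peer == 0 else 2.0 * received_from_peer / sent_to_peer
    ceiling = math.sqrt(received_from_peer / MB + 2.0)  # grows slowly with total received
    return min(max(min(ratio, ceiling), 1.0), 10.0)     # clamp to [1, 10]

def queue_score(wait_seconds: float, modifier: float) -> float:
    """Simplified ranking for my upload queue: time waited, scaled by credit."""
    return wait_seconds * modifier

# Bob's view: Alice sent him 14 MB earlier and has waited 10 minutes;
# a stranger with no history has waited 30 minutes.
alice = queue_score(600, credit_modifier(14 * MB, 0))  # modifier: min(10, sqrt(16)) = 4
stranger = queue_score(1800, credit_modifier(0, 0))    # modifier: 1
print("Alice first" if alice > stranger else "stranger first")
```

Because each client only trusts its own records, lying about your totals gains nothing here, at the cost of credits not carrying over to peers you have never traded with.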

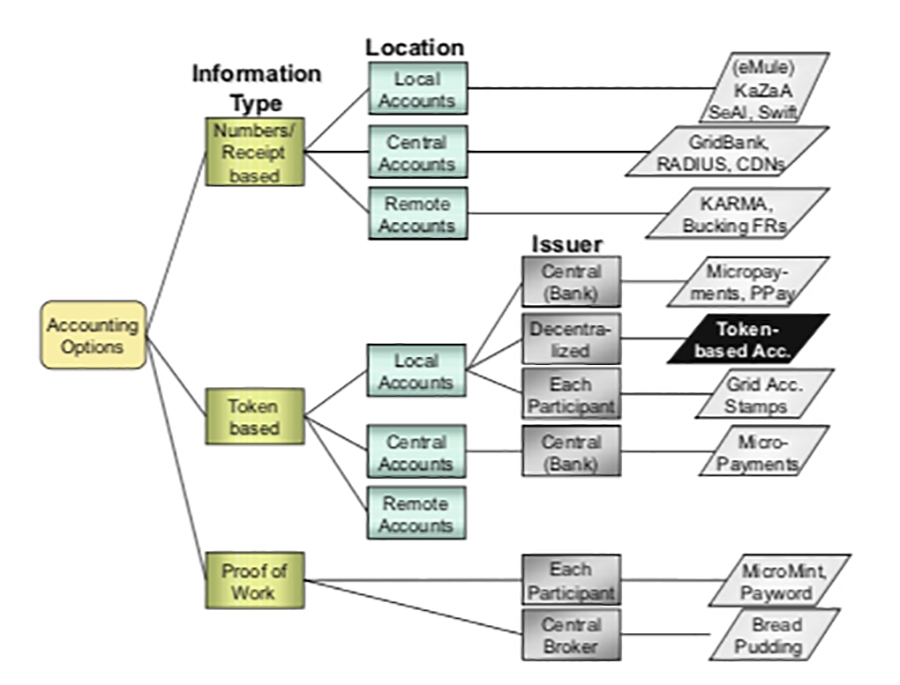

Kazaa’s peer points and eMule’s credit system were actually used in real-life p2p networks with some success. Researchers also proposed better systems that never made it to a real network. Swift proposed a system for tracking uploads and downloads among peers and only uploading to peers with positive ratios. In the theoretical Karma network, a user’s upload/download ratio would be stored and updated by 64 random other peers in the network so that it could be reused for all global transactions.
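A sketch of the Karma idea, assuming the 64 “bankers” are chosen deterministically as the peers whose hashed IDs are XOR-closest to the user’s hashed ID (effectively random, but anyone can recompute the set) and that a balance is accepted only when a strict majority of bankers agree. `bank_set` and `read_balance` are hypothetical names, and Karma’s actual transfer protocol is not modeled:

```python
import hashlib
from collections import Counter
from typing import Optional

BANK_SIZE = 64  # the bank set size given for Karma

def h(name: str) -> int:
    """Hash a peer name to a 256-bit integer ID."""
    return int.from_bytes(hashlib.sha256(name.encode()).digest(), "big")

def bank_set(user: str, peers: list[str], k: int = BANK_SIZE) -> list[str]:
    """The k peers (other than `user`) whose hashed IDs are XOR-closest to the user's.
    Anyone can recompute this, so a user cannot hand-pick friendly bankers."""
    target = h(user)
    candidates = [p for p in peers if p != user]
    return sorted(candidates, key=lambda p: h(p) ^ target)[:k]

def read_balance(reports: dict[str, int], bankers: list[str]) -> Optional[int]:
    """Accept a balance only if a strict majority of the bank set reports it."""
    votes = Counter(reports[b] for b in bankers if b in reports)
    if not votes:
        return None
    balance, count = votes.most_common(1)[0]
    return balance if count > len(bankers) // 2 else None

peers = [f"peer-{i}" for i in range(500)]
bankers = bank_set("alice", peers)
reports = {b: 120 for b in bankers}  # honest bankers agree Alice has 120 units
for b in bankers[:10]:
    reports[b] = 1_000_000           # a few colluders try to inflate her balance
print(read_balance(reports, bankers))  # 120
```

The majority rule only holds while fewer than half of a bank set collude, which is the kind of Byzantine assumption these proposals had to make.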

Tokens in theory

There is a large body of research around adding markets to p2p networks. If you’re interested, read up on PPay, PeerMint, Karma, MMAPPS, and PeerMart.

The general theme of the different approaches is to provide a “secure enough” token which can be used for in-network micro-payments. A micropayment can be for different services like bandwidth, storage, forwarding requests for peers, caching information, etc. In other words, the tokens are supposed to be a generalized in-network currency that users can spend and receive in order to align incentives.
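At its simplest, such a currency is a ledger plus a price list for services. The toy sketch below is centralized and ignores forgery and double spending, which is exactly the hard part those proposals (and later blockchains) tackle; the service names and prices are made up:

```python
# Hypothetical prices, in the smallest unit of an abstract in-network token.
PRICES = {"upload_mb": 10, "store_mb_day": 5, "forward_query": 1}

class Ledger:
    """Toy centralized ledger. Real proposals distribute this and must prevent
    forged or double-spent tokens, which this sketch does not attempt."""

    def __init__(self, balances: dict[str, int]):
        self.balances = dict(balances)

    def pay_for(self, payer: str, provider: str, service: str, units: int) -> int:
        """Move the price of `units` of `service` from payer to provider; return the cost."""
        cost = PRICES[service] * units
        if self.balances.get(payer, 0) < cost:
            raise ValueError(f"{payer} cannot afford {units} x {service}")
        self.balances[payer] -= cost
        self.balances[provider] = self.balances.get(provider, 0) + cost
        return cost

ledger = Ledger({"alice": 100})
ledger.pay_for("alice", "bob", "upload_mb", 5)      # Alice buys 5 MB of Bob's bandwidth
ledger.pay_for("bob", "carol", "store_mb_day", 10)  # Bob spends his earnings on storage
print(ledger.balances)  # {'alice': 50, 'bob': 0, 'carol': 50}
```

The point of a generalized token shows up in the second payment: Bob earned by providing bandwidth and spent on a different service, storage, from a different peer.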

Tokens in practice

In 2000, Jim McCoy, Zooko Wilcox (founder of Zcash), and Bram Cohen (eventual creator of BitTorrent) created a protocol called MojoNation. Quoting their old website:

Mojo Nation combines the flexibility of the marketplace with a secure “swarm distribution” mechanism to go far beyond any current filesharing system — providing high-speed downloads that run from multiple peers in parallel.

Sounds like BitTorrent with a token. What does the token represent? Is it a generalized currency like some of the theoretical technologies mentioned in the previous section? Quoting Jim McCoy from his Defcon talk:

In this system, you all end up paying. With MojoNation, we keep score. We say that we will create a currency, “digital coins”, that are denominated in computational resources. So every unit of this currency we call “Mojo” represents a floating basket of goods: CPU time, disk space, bandwidth; it’s merely a means for people to say “my particular resource is worth X, yours is worth Y, lets trade.”

Mojo Nation: Building a next generation distributed data service

At the time of the Defcon talk, they even explicitly talked about being able to buy and sell Mojo with real money!

How do you get the real money in and out of the system? … we will perform that function and others can perform that function. A digital coin is a number, you can go sell it on eBay if you want it. Clear with PayPal, we don’t care. We will be a market maker in the currency which means that if no one else will buy or sell your Mojo, we will.

Mojo Nation: Building a next generation distributed data service

MojoNation didn’t end up being big like the protocols we were discussing earlier. Peter Thiel’s quote “Most businesses fail for more than one reason. So when a business fails, you often don’t learn anything” comes to mind. Still, it is worth reading a later discussion from 2007 between Nick Szabo, Zooko, and (probably) Jim McCoy: comments on “Unenumerated: Nanobarter.” While the whole conversation is interesting, this comment stands out a bit:

The smartest thing Bram did when stripping down MojoNation to create BitTorrent was conforming the digital resource mechanism to the actual behavior of the users.

Reading Zooko’s post-mortem on why MojoNation didn’t work out, he cites a few technical issues that MojoNation wasn’t able to solve that limited the adoption of the network. It seems like the main conclusion is just that MojoNation tried to boil the ocean; the product was a full token marketplace, distributed file storage, a swarming file transfer like BitTorrent, and a lot more decentralized infrastructure to stitch it all together. This was likely just too much to solve in 2000.

Gnutella had a free rider problem. Kazaa had a corrupted file problem. Every network wanted to better incentivize uploading. These tragedy of the commons problems seem like exactly what you would expect in a market that doesn’t allocate resources appropriately. People experimented with tit-for-tat game theory, issuing p2p credits specifically tied to upload, and a full blown token marketplace.

The resolution of the file sharing wars, in terms of market mechanisms, seems like a case of “worse is better” to me. I think BitTorrent ended up winning for legal reasons, not because it had the right amount of market dynamics, but I’ll save that for another blog post. BitTorrent’s tit-for-tat strategy is very smart, but it seems like a lot of other market dynamics just weren’t solved during this period.

File sharing protocols took off because everyone loves free stuff. Imagine if you could earn tokens for sharing a pre-released song from a popular artist. Consider how much better these networks would have been if people had to share some of their bandwidth and hard drive space in order to get their hands on a song or movie they can’t get elsewhere. I think file sharing protocols took off despite the market mechanisms not being solved.

All of these market mechanics didn’t happen by accident. It seems like fat protocols involving any kind of resource allocation would benefit from adding an in-network currency. The fact that BitTorrent rose from the ashes of MojoNation shouldn’t be taken lightly either. It is legitimately harder to add a full on marketplace to a technology compared to solving the incentive problem for a targeted use case.

Keep in mind that all of these experiments were happening ~15 years ago. Almost every research paper trying to solve these problems noted that its scheme wasn’t fully secure and had issues with the Byzantine Generals Problem. We’ve made a lot of progress since then; the full potential many people saw at the time might be possible today.

If you liked this post, follow me on Twitter. I’ll be writing more on p2p and blockchain.

Thanks to Alain Meier, Devon Zuegel, Brandon Arvanaghi, Ryan Hoover, Andy Bromberg, and Daniel Gollahon for reading early drafts of this post.